We are watching the AI industry commit the original sin of the web all over again.

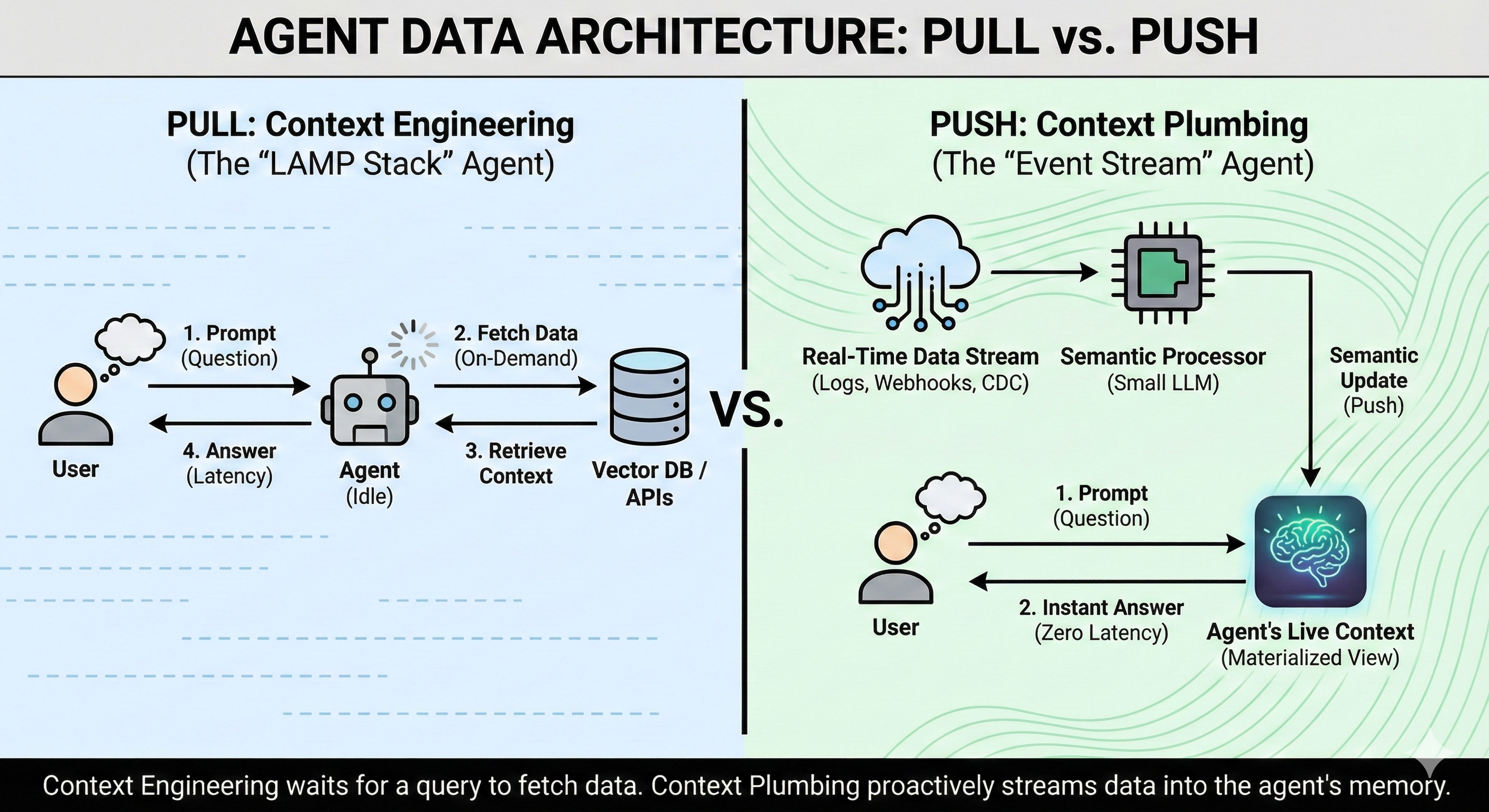

For the last two years, we’ve obsessed over Context Engineering, treating Agents like static, PHP-era websites. When a user asks a question, the system performs a “database fetch” on demand, pulling context just in time to generate an answer. We haven’t reinvented software; we’ve just replaced the mouse click with a prompt, keeping the same brittle, pull-based architecture underneath.

We are building the “LAMP Stack” for AI, where every interaction triggers a slow, synchronous query to the backend.

The web industry eventually learned that “Pull” doesn’t scale for rich experiences. We moved to Push. We adopted Event Sourcing, Stream Processing, and Materialized Views to ensure data was ready before the user asked for it.

To build Agents that actually work, we need to stop obsessing over the Prompt (the UI) and start laying the Context Plumbing (the infrastructure).

The Analogy: The Monolith vs. The Materialized View

To understand why current Agents feel slow and disjointed, look at the evolution of data architecture. We are re-living the shift from the Monolith to the Streaming Platform.

1. The “LAMP Stack” Agent (Context Engineering)

In the early web, everything was Request-Response.

- The Flow: User clicks a page $\rightarrow$ App queries the DB $\rightarrow$ App renders HTML $\rightarrow$ User sees page.

- The AI Equivalent: This is standard RAG (Retrieval Augmented Generation).

- User Prompts $\rightarrow$ Agent queries Vector DB $\rightarrow$ Agent stuffs Context Window $\rightarrow$ Agent answers.

- The Failure: This is Pull-based. The system is idle until the user acts. You cannot be proactive. You are limited by query latency.

2. The “Kappa Architecture” Agent (Context Plumbing)

As data scale exploded, we couldn’t query the raw DB at runtime anymore. We moved to Event Sourcing.

-

The Flow: Data flows into a Stream $\rightarrow$ Processors crunch it in real-time $\rightarrow$ State is saved to a Materialized View.

-

The AI Equivalent: This is Context Plumbing.

- The Stream: Business events (Slack messages, Git commits, Jira updates).

- The Processor: LLMs acting as ETL workers.

- The Materialized View: The Agent’s Context Window.

-

The Success: This is Push-based. We don’t “look up” the answer when the user asks. We have effectively pre-computed the answer.

-

The Shift: You need to treat the Agent’s Context Window not as a scratchpad, but as a Live Materialized View.

The Derivation: Refactoring for “Live State”

Let’s look at the technical refactor required to move from Pull to Push using a standard Customer Support Agent.

The Naive Approach: The Tool-Using “Pull” Agent

This is the standard architecture today (e.g., standard LangChain/AutoGPT).

Trigger: User types: “Where is my order?”

Execution:

- LLM pauses generation.

- LLM calls

get_order_status(user_id). - API returns JSON:

{id: 123, status: "shipped"}. - LLM resumes and answers.

The Failure Mode: The “Spinner Problem.”

If the order status changes while the user is typing, the Agent doesn’t know. It has no “Do What I Mean” (DWIM) intuition because it only knows what it explicitly fetches. It cannot interrupt you to say, “By the way, your package just arrived.”

The Refactor: The “Push” Architecture

We apply the “Materialized View” pattern. But there is a catch.

In traditional software, a materialized view is Structured JSON (for a React component).

In AI software, the materialized view must be Semantic Text (for an LLM).

The Semantic Pipeline:

- Event:

OrderShippedEventfires. - Semantic ETL: A small model (e.g., Llama-3-8b) reads the event and summarizes it: “The order #123 was shipped at 10 AM.”

- State Update: This sentence is pushed into the Agent’s System Prompt immediately.

Now, when the user types “Where is my…”, the Agent already knows the order is shipped. It doesn’t need a tool. It has “Intuition.”

The Implementation: The Enterprise Platform Spec

If you are building this as a platform, simply giving developers Kafka and Flink isn’t enough. The plumbing is standard, but the consumer is different.

Here is the 4-step pipeline for Context Plumbing:

1. Ingest (The Wire)

Standard Event-Driven Architecture. Hook into webhooks, CDC streams, or logs.

Input: {"event": "comment_added", "text": "This code is messy", "author": "Dave"}

2. Process (The Semantic ETL)

This is the “AI Twist.” In a standard data pipe, you map fields. In Context Plumbing, you use a small, cheap LLM as a Summarizer.

Task: “Convert this raw JSON event into a memory update.”

Transformation: The small model outputs: “Dave commented that the code is messy.”

3. Store (The Live Context Object)

You don’t just append this to a log; you manage it like a cache.

Constraint: The Context Window is expensive and finite (e.g., 128k tokens).

Logic: You need an Eviction Policy.

- TTL (Time-To-Live): “Dave’s comment is irrelevant after 24 hours. Delete it.”

- Summarization: “Combine the last 5 comments into one summary.”

4. Serve (Just-In-Time Injection)

When the inference request comes in, you don’t fetch data. You simply inject the current state of the “Live Context Object” directly into the System Message.

# The Agent is "Stateful" because the infrastructure handles the state.

system_prompt = f"""

You are a helpful assistant.

CURRENT REALITY SNAPSHOT (Updated 2ms ago):

- Active Jira Ticket: PROJ-123 (Blocked)

- Latest Team Sentiment: Frustrated by code quality (Dave)

- System Status: All Systems Green

"""

The Builder’s Takeaway

We are moving from an era of Construction (building the perfect prompt at request time) to an era of Flow (managing the stream of state).

Context Engineering is the “LAMP Stack.” It assumes the world waits for you to ask.

Context Plumbing is the “Event Stream.” It assumes the world moves fast, and the Agent needs to keep up.

The novelty isn’t in the LLM. The novelty—and where you can sell a platform—is in the adapter at the end of the pipe that turns “Database Events” into “Agent Memories.”